15 May

Qubit technologies

Quantum computing has emerged as one of the most promising frontiers in computing technology, with the potential to revolutionize fields from cryptography to drug discovery. At the heart of this technological revolution lies the fundamental building block: the qubit. Unlike classical bits, which can only be in a state of 0 or 1, qubits can exist in a superposition of both states, which certain quantum algorithms exploit to solve some problems far faster than the best known classical methods.

However, building reliable qubits presents enormous engineering challenges. Multiple competing technologies have emerged, each with unique approaches to creating and controlling these quantum states. This article explores the various qubit technologies currently being developed and compares their strengths, limitations, and potential for scaling up to practical quantum computers.

In theory, any physical system with two easily distinguishable quantum states can be used as a qubit. At its core, it’s just a matter of assigning the binary values 0 and 1 to two energy levels of a quantum system. While this idea is simple in principle, in practice it is extremely challenging. Quantum states are inherently fragile, and every implementation faces different limitations based on the specific physical system used.
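To make the idea of assigning 0 and 1 to two levels concrete, here is a minimal sketch in plain Python. It models a single qubit as a pair of complex amplitudes and uses the Born rule to get the probability of reading 0 or 1. The function names (`normalize`, `measurement_probabilities`, `measure`) are illustrative choices, not part of any quantum library, and the model ignores noise and decoherence entirely.

```python
import math
import random


def normalize(alpha, beta):
    """Scale two complex amplitudes so that |alpha|^2 + |beta|^2 == 1."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    if norm == 0:
        raise ValueError("at least one amplitude must be non-zero")
    return alpha / norm, beta / norm


def measurement_probabilities(alpha, beta):
    """Born rule: probabilities of reading 0 and 1 when the qubit is measured."""
    alpha, beta = normalize(alpha, beta)
    return abs(alpha) ** 2, abs(beta) ** 2


def measure(alpha, beta, rng=random):
    """Simulate one measurement; returns 0 or 1 (idealized, noise-free)."""
    p0, _ = measurement_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1


# Equal superposition of the two levels: both outcomes are equally likely.
p0, p1 = measurement_probabilities(1, 1)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")
```

A real device never gives this clean picture: the fragility mentioned above shows up as the amplitudes drifting away from their intended values before the measurement happens.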

To choose the right type of qubit, several important criteria must be considered:

- Operation speed – how quickly can quantum gates be executed?

- Fidelity – how precise and error-free are these operations?

- Manufacturability – how complex or costly is it to build these qubits?

- Control – how can we manipulate and read the quantum state?

- Scalability – how feasible is it to integrate more qubits on a single chip?

- Error resilience – how robust are the qubits against decoherence and noise?

In short, there are many promising ways to create qubits. However, every technology has its strengths and weaknesses, and to this day no implementation has gained a decisive upper hand. The figure below lists the most widely pursued approaches and compares them on some of the features a quantum computer most needs in order to scale up, showing why each is believed to be promising. That said, this is a very technical problem, mostly at the hardware level, and trying to guess which technology will ultimately prevail is an almost impossible task.

Comparison of different qubit technologies highlighting their strengths and weaknesses, inspired by McKinsey.

It’s really difficult to assess which technology is better, not only because of how intricate the hardware is but also because many different aspects make up a “good” or “useful” quantum computer. Straightforward metrics such as qubit count are informative in some respects but misleading in others, so on their own they can give a distorted comparison of the technologies. For example, trapped-ion systems excel in single-qubit fidelity, but they are often regarded as slow at executing gates. This means that the technology could reach logical qubits sooner but might struggle to scale up and become fast enough to run large circuits.
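The fidelity side of this trade-off can be made quantitative with a rough model. Assuming every gate succeeds independently with the same fidelity f, a circuit of G gates runs without error with probability of about f^G. The sketch below uses that assumption to estimate how many gates fit within a target success probability. The fidelity values are illustrative, not measurements from any vendor, and the helper names are hypothetical.

```python
import math


def success_probability(gate_fidelity, n_gates):
    """Chance that n_gates all succeed, assuming independent, identical errors."""
    return gate_fidelity ** n_gates


def max_gates(gate_fidelity, target_success=0.5):
    """Largest gate count G such that gate_fidelity**G >= target_success."""
    if not 0 < gate_fidelity < 1:
        raise ValueError("gate_fidelity must be strictly between 0 and 1")
    if not 0 < target_success < 1:
        raise ValueError("target_success must be strictly between 0 and 1")
    return math.floor(math.log(target_success) / math.log(gate_fidelity))


# Illustrative fidelities only: each extra "nine" buys roughly 10x more gates.
for fidelity in (0.99, 0.999, 0.9999):
    print(f"fidelity {fidelity}: about {max_gates(fidelity)} gates "
          f"before success drops below 50%")
```

This says nothing about speed: a platform with better fidelity can still take longer to finish the same circuit if each gate is slower, which is why no single number settles the comparison.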

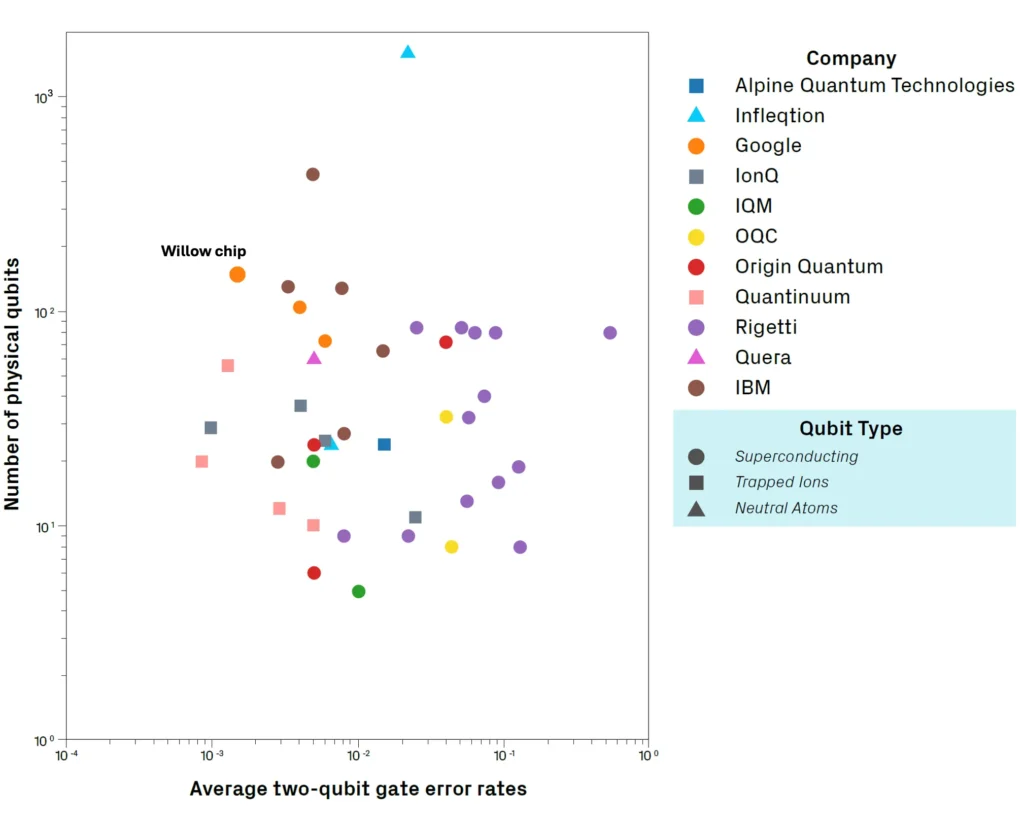

The way out of the Noisy Intermediate-Scale Quantum (NISQ) era is to build quantum computers that are both bigger (more qubits) and better (lower error rates). The image below shows the current landscape of qubit technologies across these two metrics. As we can see, no technology or company is clearly good (top-left) or clearly bad (bottom-right).

Performance of quantum chips for different technologies and vendors.

To address this complexity, the quantum community is working toward standardized, hardware-agnostic performance metrics. These metrics aim to abstract away from the physical implementation and focus instead on the capabilities of the quantum computer. Some of the most common include:

- Logical Qubit Count: A logical qubit is a high-fidelity, error-corrected unit constructed from multiple physical qubits (typically 100–1000). This metric reflects true computational usefulness.

- Circuit Depth: Measures how many layers of gates can be applied sequentially to a qubit. It’s a proxy for how deep or complex a quantum algorithm can be on a given machine.

- Quantum Volume (QV): Proposed by IBM, QV is defined as 2^d, where d is the size of the largest square circuit (d qubits wide and d layers deep) that a system can run reliably. It captures qubit count, fidelity, connectivity, and more in a single number.

- QuOps: Short for “quantum operations,” this metric counts the total number of quantum gates and measurements performed in a circuit.
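The Quantum Volume definition above can be sketched in a few lines. In IBM's protocol, a square circuit of size d counts as passing when the measured heavy-output probability exceeds 2/3. The sketch below applies only that threshold and skips the statistical confidence test the full protocol requires. The input data is hypothetical, and returning 1 when nothing passes is a convention of this sketch.

```python
HEAVY_OUTPUT_THRESHOLD = 2 / 3  # pass mark used in the Quantum Volume protocol


def quantum_volume(heavy_output_by_size):
    """Return 2**d for the largest square-circuit size d that passes.

    heavy_output_by_size maps a circuit size d (d qubits, d layers) to the
    measured heavy-output probability for that size.
    """
    passing = [
        d for d, probability in heavy_output_by_size.items()
        if probability > HEAVY_OUTPUT_THRESHOLD
    ]
    if not passing:
        return 1  # sketch convention: 2**0 when no square circuit passes
    return 2 ** max(passing)


# Hypothetical benchmark results, not taken from any real device.
results = {2: 0.80, 3: 0.74, 4: 0.69, 5: 0.61}
print(quantum_volume(results))  # sizes 2, 3 and 4 pass, so QV = 2**4 = 16
```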

These metrics are especially useful for comparing different platforms and assessing real-world capabilities. They also help estimate the resources required for advanced tasks—for example, factoring a 2048-bit RSA key using Shor’s algorithm.

| Task | Logical Qubits (N) | Circuit Depth (D) | QuOps (N*D) |
|---|---|---|---|
| 2048-bit integer RSA | 6,115 | 2.1 billion | 13 TeraQuOps |
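The QuOps figure in the table is simply the product N*D, which is easy to check. The sketch below redoes that arithmetic and, as a rough extra, converts the logical qubit count into a physical qubit range using the 100 to 1000 physical qubits per logical qubit quoted in the metric list above. The function names are illustrative.

```python
def quops(logical_qubits, circuit_depth):
    """Total quantum operations, estimated as width times depth (N * D)."""
    return logical_qubits * circuit_depth


def physical_qubit_range(logical_qubits, overhead=(100, 1000)):
    """Rough physical qubit count, given a (low, high) overhead per logical qubit."""
    low, high = overhead
    return logical_qubits * low, logical_qubits * high


N = 6_115           # logical qubits, from the table
D = 2_100_000_000   # circuit depth, from the table

total = quops(N, D)
print(f"{total / 1e12:.1f} TeraQuOps")  # 12.8, which the table rounds to 13

low, high = physical_qubit_range(N)
print(f"{low:,} to {high:,} physical qubits")
```

Under these assumptions, the RSA example would need somewhere between about 0.6 and 6 million physical qubits, which gives a sense of the gap between today's devices and this task.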

Benchmarking efforts like those by Metriq aim to create a comprehensive public record of performance metrics for different quantum devices and algorithms. This transparency helps track the progress of each technology over time.

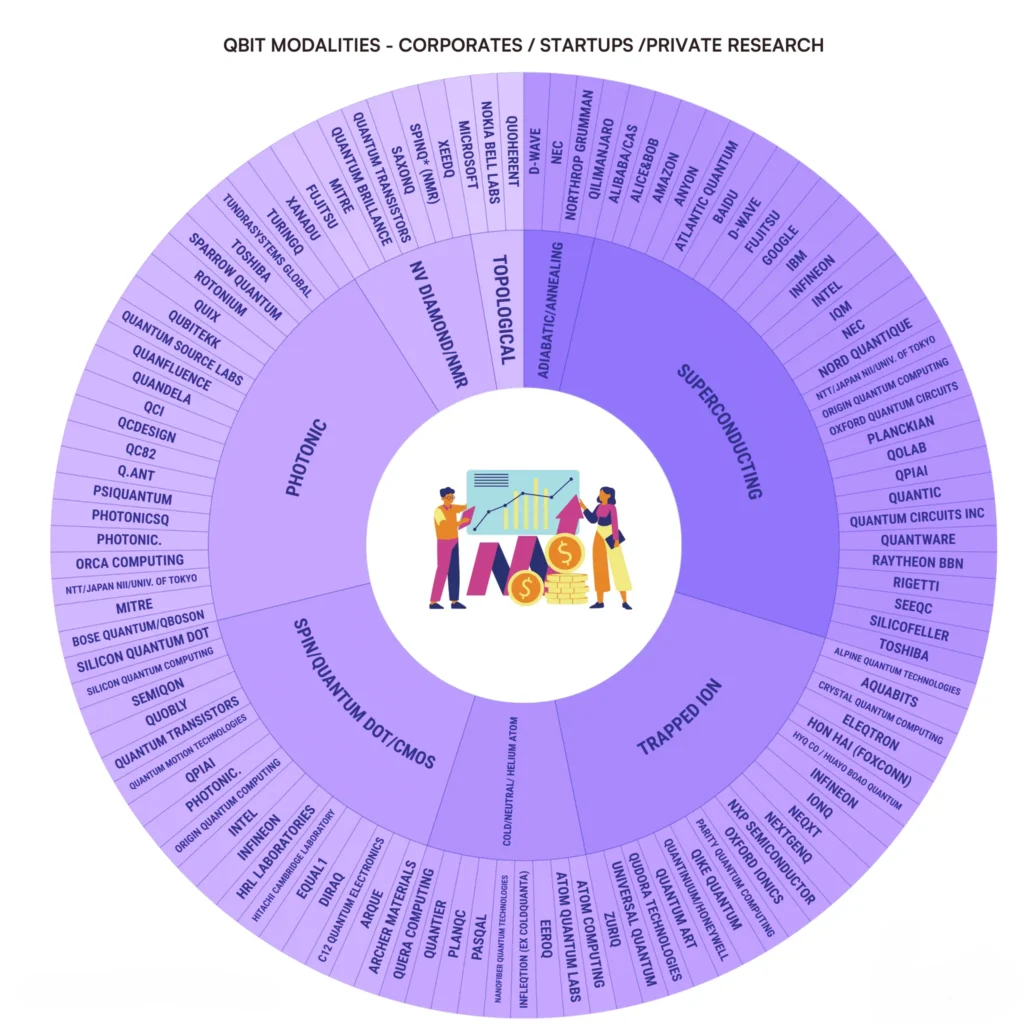

One way to appreciate the trade-offs between different qubit technologies is by looking at which companies are pursuing them. While superconducting qubits currently dominate in terms of investment and maturity, other approaches, such as trapped ions, neutral atoms, photonics, and topological qubits, are rapidly advancing. A notable example is Microsoft’s recent announcement of Majorana 1, a topological qubit chip. Topological qubits were long among the least developed approaches, and this announcement, although still debated, has raised the technology’s perceived potential.

The chart below shows current vendors and startups grouped by their chosen qubit technologies. Each one is betting on the specific advantages of its platform, which points to a diversified and competitive field.

Landscape of current vendors/startups divided by qubit technology. Credit to Michel Kurek.

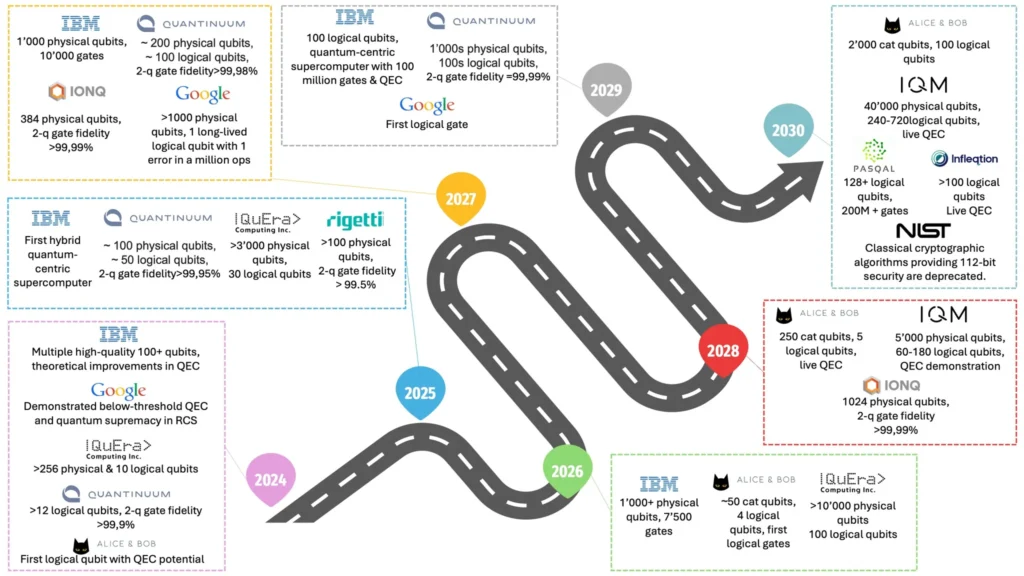

Finally, it is very interesting to see how different technologies are leading to comparable roadmaps. Almost every one of the big players expects to reach the milestone of 100 logical qubits in the next 5 years. This is often regarded as the threshold for so-called utility-scale quantum computing, i.e., the point at which quantum computers outperform the best classical ones on useful tasks and start having an economic impact on society.

In the next 5 years, we will probably see either one technology ultimately prevailing or different qubit implementations coexisting, each used for the applications that best suit its strengths.

Comprehensive timeline of quantum computing vendors for the next 5 years.

As quantum computing continues to evolve rapidly, staying informed about these competing technologies and their progress is crucial. Whether you’re a researcher, investor, or technology enthusiast, following the development of these different approaches will help you better understand and potentially contribute to the quantum computing landscape. The next step is to dive deeper into specific technologies that align with your interests or industry needs.