27 Sep

Quantum Computing and Environmental Sustainability

The idea that quantum computing could be environmentally sustainable may seem paradoxical at first. After all, these machines operate under extreme laboratory conditions, often requiring temperatures colder than outer space, with carefully isolated environments and highly specialized hardware. Yet, despite their energy-intensive setup, there is growing discourse around quantum computers as a sustainable alternative to traditional high-performance computing (HPC).

To properly evaluate this claim, it is essential to look beyond surface-level comparisons and assess three critical layers: computational efficiency, transformative applications (particularly in chemistry), and fundamental physical principles.

Layer 1: Computational Speedups

The first and perhaps most intuitive reason behind the potential environmental benefit of quantum computing is its capacity to solve certain problems faster than classical computers, in some cases exponentially faster. For those problems, the more favorable scaling makes previously intractable instances solvable within reasonable timeframes.

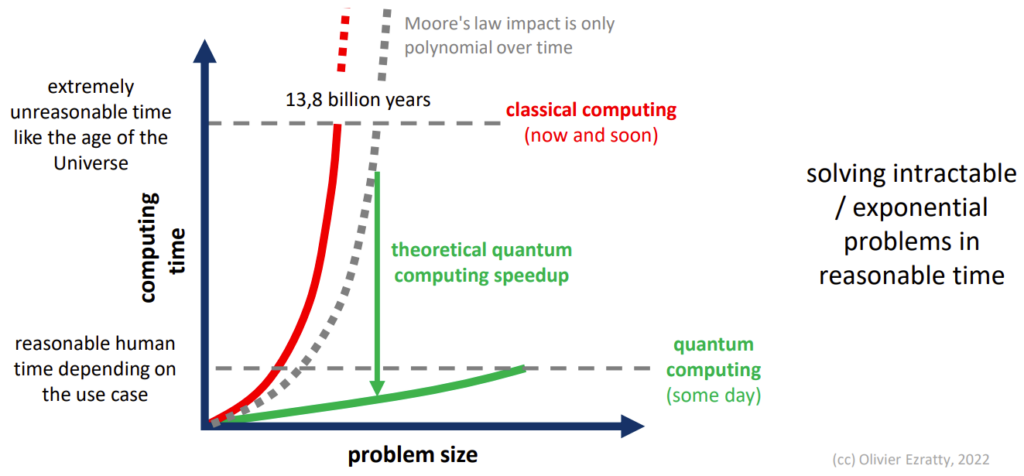

The figure illustrates how computation time scales with problem size. Classical computing time (shown in red) scales exponentially for many industrially relevant problems, making them effectively impossible to solve as the problem size increases. In contrast, quantum computing offers a theoretical advantage for certain problems by solving them in polynomial time (green line).

Comparison of the time taken to compute a solution (y-axis) depending on the problem size (x-axis).
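To make the shape of the two curves concrete, here is a minimal sketch in Python. The cost models (`2 ** n` for the classical curve and `n ** 3` for the quantum curve) are assumptions chosen purely for illustration; real algorithms have their own exponents and constant factors, and the function names are hypothetical.

```python
# Hypothetical cost models, chosen only to illustrate the shape of the curves.

def classical_steps(n: int) -> int:
    """Exponential scaling: the step count doubles with each unit of problem size."""
    return 2 ** n


def quantum_steps(n: int) -> int:
    """Polynomial scaling: cubic in the problem size (an assumed exponent)."""
    return n ** 3


if __name__ == "__main__":
    for n in (5, 10, 20, 40, 80):
        print(
            f"n={n:>3}  exponential={classical_steps(n):.3e}  "
            f"polynomial={quantum_steps(n):.3e}"
        )
```

With these assumed models, the polynomial curve is actually the more expensive one at small sizes (125 steps versus 32 at n = 5), which is why any advantage only shows up beyond a crossover point.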

But even for problems that classical computers can already solve today (those below the “reasonable human time depending on the use case” line in the figure), we can begin to compare quantum and classical machines in terms of energy consumption.

Popular descriptions often compare quantum computers with everyday PCs, but a proper comparison must be with high‑performance computing systems (supercomputers), the real competitors for large, complex scientific workloads, which consume immense amounts of energy.

Consider the Frontier supercomputer, one of the most powerful machines in existence: it draws approximately 21 megawatts of power, comparable to the consumption of a small town, which translates to more than $23 million in annual energy costs. Similarly, the Setonix supercomputer in Australia requires around 477 kilowatts of continuous power, comparable to the average consumption of about 400 households. These systems represent the computational backbone for scientific research but come with a hefty environmental price tag.

In contrast, quantum computers, even today’s early-stage prototypes, consume dramatically less power. Most operational quantum machines use between 7 and 25 kilowatts, roughly what a small office or a handful of households might draw. Even if their performance today is not comparable to that of HPC systems, a modeling study by Oak Ridge National Laboratory showed potential future energy reductions of over 1 million kWh compared with classical supercomputers, even before quantum machines reach full maturity.

Hence, once the “quantum advantage” threshold is achieved, quantum computing may offer compelling energy savings, especially for large-scale or repeated computations.
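The power figures quoted in this section can be put on a common footing by converting them to energy per year. The sketch below assumes, as a simplification, that each system draws its quoted power continuously for a full 8,760-hour year; actual utilization varies, and the function and variable names are hypothetical.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_kwh(power_kw: float) -> float:
    """Energy used in one year by a system drawing power_kw continuously (assumption)."""
    return power_kw * HOURS_PER_YEAR


# Power figures quoted in the text, converted to kilowatts.
systems_kw = {
    "Frontier": 21_000.0,
    "Setonix": 477.0,
    "Quantum computer (low end)": 7.0,
    "Quantum computer (high end)": 25.0,
}

if __name__ == "__main__":
    for name, power_kw in systems_kw.items():
        print(f"{name:<28} {annual_energy_kwh(power_kw):>15,.0f} kWh/year")
```

Under that assumption, Frontier comes out at about 184 million kWh per year, against 61,320 to 219,000 kWh for a quantum machine in the 7 to 25 kW range. This compares power draw only; it says nothing about how much useful computation each system delivers.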

Layer 2: Chemistry Simulation with Great Environmental Impact

Beyond efficiency, quantum computers offer a unique computational paradigm inherently suited to simulating the natural world. Chemistry, materials science, and molecular biology often deal with quantum systems, and modeling them accurately with classical computers is practically impossible for larger molecules.

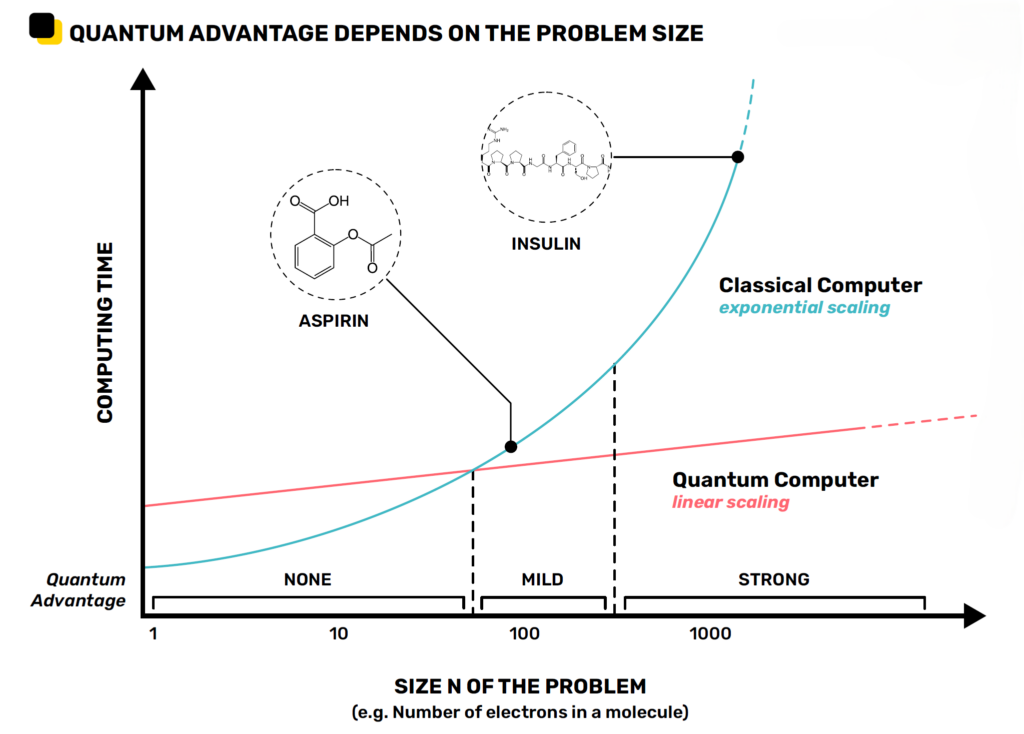

The figure shows the same difference in scaling between quantum and classical computing, this time with a focus on chemical problems.

Modeling molecules with 100 or more components exceeds the capabilities of today’s most powerful high-performance computers. Unfortunately, the most interesting problems would require simulating molecules that are at least ten times larger.

Same graph as above, focused on chemical problems, showing example molecules for certain sizes. (cc) Alice & Bob.

A prime example of this problem is the Haber-Bosch process for ammonia synthesis. This process alone accounts for 2% of total global final energy consumption, primarily due to its inefficiency and reliance on fossil fuels. Scientists have long sought a more sustainable alternative, but simulating the necessary quantum-level interactions of nitrogen and hydrogen molecules is beyond the capability of classical systems.

A quantum computer could help find a way to synthesize ammonia more efficiently or to produce new materials, with an enormous impact on environmental issues.

Ammonia is just one of the easiest examples to consider, but once quantum computers unlock the possibility of simulating large and complex molecules, numerous interesting applications emerge.

One promising candidate for quantum computing research is the enzyme Rubisco (Ribulose-1,5-bisphosphate carboxylase/oxygenase). This enzyme, the most abundant on Earth, is essential for CO₂ fixation during photosynthesis in plants. Despite its importance, Rubisco is notoriously slow and inefficient. A quantum computer could simulate Rubisco’s complex mechanism and active site in unprecedented detail, helping researchers understand how to modify its structure to make it significantly faster and more efficient at absorbing CO₂.

Layer 3: Reversibility and Energy Dissipation

This third and final layer relates to the physics of quantum computing, and thus presents a more complex conceptual framework.

Classical computers generate heat during intensive operations due to fundamental physical processes. The primary reason for this heat generation is that information bits are physically represented by electrical current, which dissipates heat as it flows through resistive conductors—a phenomenon known as the Joule effect.

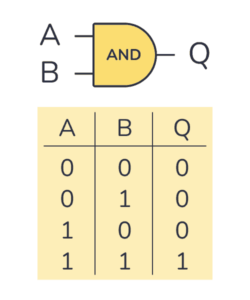

A secondary, less-documented reason pertains to information theory itself. In order to execute operations and produce outputs from binary data stored in computing systems, information must be processed through logical gates. The AND gate represents a fundamental example of such a logical structure, producing an output of 1 (TRUE) exclusively when both inputs are 1, and 0 (FALSE) in all other instances.

AND gate and its truth table

A subtle point, but one that is fundamental to our reasoning, is that more information is available at the input (A being 0 or 1 and B being 0 or 1) than at the output once the process is over (Q being 0 or 1).

Consider this example: if one could only observe the output Q and receive 0, it would be impossible to determine which of the first three input combinations generated that result. Additionally, it would not be feasible to design a gate that accepts Q and reconstructs the original inputs A and B. Therefore, the process is irreversible, and information is inherently lost.
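The loss of information can be checked by enumerating the gate’s truth table and grouping the inputs by the output they produce. This is a small illustrative sketch; the helper names `and_gate` and `preimages` are hypothetical.

```python
from itertools import product


def and_gate(a: int, b: int) -> int:
    """Classical AND: returns 1 only when both inputs are 1."""
    return a & b


def preimages(gate):
    """Group every 2-bit input (A, B) by the output Q it produces."""
    table = {}
    for a, b in product((0, 1), repeat=2):
        table.setdefault(gate(a, b), []).append((a, b))
    return table


if __name__ == "__main__":
    for output, inputs in sorted(preimages(and_gate).items()):
        print(f"Q={output} can come from {len(inputs)} input(s): {inputs}")
```

An output of 0 maps back to three different inputs, so no function of Q alone can recover A and B. A reversible gate would need exactly one input for every output.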

The question arises: what is the relationship between this phenomenon and energy dissipation?

Erasing information must lead to a proportional increase in entropy, which the system dissipates as heat. This is known as Landauer’s principle.

Landauer’s principle states that the minimum energy needed to erase one bit of information is proportional to the temperature at which the system is operating. Specifically:

\[ E \geq k_B T \ln 2 \]

where \(k_B\) is the Boltzmann constant and \(T\) is the temperature in Kelvin.

At room temperature (\(T \approx 300\,K\)):

\[ E \approx 2.9 \times 10^{-21}\ J \;\; (0.018\ \text{eV}) \]
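The room-temperature figure can be reproduced numerically. The sketch below uses the exact SI values of the Boltzmann constant and the electronvolt; the function name `landauer_limit_joules` is a hypothetical helper, not part of any library.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23   # k_B, exact by SI definition
JOULES_PER_EV = 1.602176634e-19    # 1 eV in joules, exact by SI definition


def landauer_limit_joules(temperature_k: float) -> float:
    """Minimum energy to erase one bit at the given temperature: k_B * T * ln 2."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)


if __name__ == "__main__":
    energy_j = landauer_limit_joules(300.0)
    print(f"{energy_j:.3e} J per bit")
    print(f"{energy_j / JOULES_PER_EV:.4f} eV per bit")
```

Because the bound is linear in \(T\), halving the operating temperature halves the minimum energy per erased bit.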

Landauer’s principle thus sets a theoretical lower limit on the energy consumption of computation. It holds that an irreversible change in information stored in a computer, such as merging two computational paths, dissipates a minimum amount of heat to its surroundings.

Although the heat dissipated by this principle is much smaller than other sources (like the Joule effect and imperfections), it still establishes a fundamental limit on the efficiency of classical computation.

Gate-based quantum computing, on the other hand, is a reversible computing paradigm. This means that any gate applied to a qubit can be undone by applying its inverse, which returns the qubit to its initial state.

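This reversibility can be traced back to the probabilistic nature of quantum computing. A qubit, the unit of information for a quantum computer, can be written as:

\[ |\psi\rangle = \alpha|0\rangle + \beta|1\rangle, \qquad |\alpha|^2 + |\beta|^2 = 1 \]

where \(\alpha\) and \(\beta\) are complex amplitudes, and \(|\alpha|^2\) and \(|\beta|^2\) are the probabilities of measuring 0 and 1.

This means that any operation on a qubit state, which changes its amplitudes and probabilities, must preserve the sum \(|\alpha|^2 + |\beta|^2\). Gates implement this constraint mathematically as matrices that perform only unitary transformations.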

Finally, a key feature of unitary transformations is that they are always reversible: the inverse of a unitary matrix is its conjugate transpose. Because a reversible gate erases no information, Landauer’s principle imposes no minimum on the energy dissipated per gate operation.
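As an illustration of this reversibility, the sketch below applies a single-qubit gate to a state and then undoes it with the conjugate transpose. The Hadamard gate and the starting amplitudes are assumptions chosen for the example, and the helper names are hypothetical; the code is plain Python with no quantum library.

```python
import math


def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a 2-component state vector."""
    return [sum(gate[i][j] * state[j] for j in range(2)) for i in range(2)]


def dagger(gate):
    """Conjugate transpose, which is the inverse of a unitary matrix."""
    return [[complex(gate[j][i]).conjugate() for j in range(2)] for i in range(2)]


def norm_squared(state):
    """Total probability: the sum of squared magnitudes of the amplitudes."""
    return sum(abs(amplitude) ** 2 for amplitude in state)


S = 1 / math.sqrt(2)
HADAMARD = [[S, S], [S, -S]]  # example unitary gate (an assumption for this sketch)

# Hypothetical starting amplitudes: |0.6|^2 + |0.8i|^2 = 0.36 + 0.64 = 1
initial = [0.6 + 0j, 0.8j]

transformed = apply_gate(HADAMARD, initial)
restored = apply_gate(dagger(HADAMARD), transformed)

if __name__ == "__main__":
    print("total probability after gate:", norm_squared(transformed))
    print("state after undoing the gate:", restored)
```

The gate changes both amplitudes but leaves the total probability at 1 (up to floating-point rounding), and applying the conjugate transpose recovers the starting state.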

In theory, one would need to spend energy to bring the quantum computer to the right operating conditions, but the subsequent operations and calculations could come at zero heat dissipation.

This third layer is less intuitive and, while quantum computers are still in their infancy, less practical. However, it offers a glimpse into a future where quantum computing could be both powerful and environmentally sustainable, not just because of its applications but due to its fundamental physical properties. This opens up exciting possibilities for green computing at scale, where the environmental cost of calculations could become negligible compared to today’s standards.